Heat I: Sensing, Measuring and Understanding Temperature

Michael Fowler

Feeling and seeing temperature changes

Within some reasonable temperature range, we can get a rough idea of how warm something is by touching it. But this can be unreliable: if you put one hand in cold water and one in hot, then plunge both into lukewarm water, one hand will tell you it’s hot while the other will tell you it’s cold. For something too hot to touch, we can often get an impression of how hot it is by approaching and sensing the radiant heat. If the temperature increases enough, the object begins to glow and we can see it’s hot!

The problem with these subjective perceptions of heat is that they may not be the same for everybody. If our two hands can’t agree on whether water is warm or cold, how likely is it that a group of people can set a uniform standard? We need to construct a device of some kind that responds to temperature in a simple, measurable way—we need a thermometer.

The first step on the road to a thermometer was taken by one Philo of Byzantium, an engineer, in the second century BC. He took a hollow lead sphere connected with a tight seal to one end of a pipe, the other end of the pipe being under water in another vessel.

To quote Philo: “…if you expose the sphere to the sun, part of the air enclosed in the tube will pass out when the sphere becomes hot. This will be evident because the air will descend from the tube into the water, agitating it and producing a succession of bubbles.

Now if the sphere is put back in the shade, that is, where the sun’s rays do not reach it, the water will rise and pass through the tube …”

“No matter how many times you repeat the operation, the same thing will happen.

In fact, if you heat the sphere with fire, or even if you pour hot water over it, the result will be the same.”

Notice that Philo did what a real investigative scientist should do—he checked that the experiment was reproducible, and he established that the air’s expansion was in response to heat being applied to the sphere, and was independent of the source of the heat.

Some Classic Dramatic Uses of Temperature-Dependent Effects

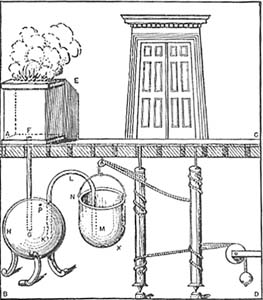

This expansion of air on heating became widely known in classical times, and was used in various dramatic devices. For example, Hero of Alexandria describes a small temple where a fire on the altar causes the doors to open.

The altar is a large airtight box, with a pipe leading from it to another enclosed container filled with water. When the fire is set on top of the altar, the air in the box heats up and expands through the pipe into this second container. The water there is forced out through an overflow pipe into a bucket hung on a rope attached to the door hinges in such a way that as the bucket fills with water, it drops, turns the hinges, and opens the doors. The overflow pipe reaches almost to the bottom of the bucket, so that when the altar fire goes out, the water is sucked back and the doors close again. (Presumably, once the fire is burning, the god behind the doors is ready to do business and the doors open…)

Still, none of these ingenious devices is a thermometer. There was no attempt (at least none recorded) by Philo or his followers to make a quantitative measurement of how hot or cold the sphere was. And the “meter” in thermometer means measurement.

The First Thermometer

Galileo claimed to have invented the first thermometer (although this is the subject of much debate). Well, actually, he called it a thermoscope, but he did try to measure “degrees of heat and cold” according to a colleague, and that qualifies it as a thermometer. (Technically, a thermoscope is a device that makes it possible to see a temperature change, whereas a thermometer can measure the change.) Galileo used an inverted narrow-necked bulb, like a hen’s egg with a long glass tube attached at the tip.

He first heated the bulb with his hands then immediately put it into water. He recorded that the water rose in the bulb the height of “one palm”. Later, either Galileo or his colleague Santorio Santorio put a paper scale next to the tube to read off changes in the water level. This definitely made it a thermometer, but who thought of it first isn’t clear (they argued about it). And, in fact, this thermometer had problems.

Question: what problems? If you occasionally top up the water, why shouldn’t this thermometer be good for recording daily changes in temperature?

Answer: because it’s also a barometer! But Galileo didn’t know about atmospheric pressure.

Torricelli, one of Galileo’s pupils, was the first to realize, shortly after Galileo died, that the real driving force in suction was external atmospheric pressure, a satisfying mechanical explanation in contrast to the philosophical “nature abhors a vacuum”. In the 1640’s, Pascal pointed out that the variability of atmospheric pressure rendered the air thermometer untrustworthy.

Liquid-in-glass thermometers were used from the 1630’s, and they were of course insensitive to barometric pressure. Meteorological records were kept from this time, but there was no real uniformity of temperature measurement until Fahrenheit, almost a hundred years later.

Newton’s Anonymous Table of Temperatures

The first systematic account of a range of different temperatures, “Degrees of Heat”, was written by Newton, but published anonymously, in 1701. Presumably he felt that this project lacked the timeless significance of some of his other achievements.

Taking the freezing point of water as zero, Newton found the temperature of boiling water to be almost three times that of the human body, and that of melting lead eight times as great (actually 327°C, whereas 8 × 37 = 296, so this is pretty good!), but for higher temperatures, such as that of a wood fire, he underestimated considerably. He used a linseed-oil liquid-in-glass thermometer up to the melting point of tin (232°C). (Linseed oil doesn’t boil until 343°C, but that is also its autoignition temperature!)

Newton tried to estimate the higher temperatures indirectly. He heated up a piece of iron in a fire, then let it cool in a steady breeze. He found that, at least at the lower temperatures where he could cross-check with his thermometer, the temperature dropped in a geometric progression. That is, if it took five minutes to drop from 80° above air temperature to 40° above air temperature, it took another five minutes to drop to 20° above air, another five to drop to 10° above, and so on. He then assumed this same pattern of temperature drop held at the high temperatures beyond the reach of his thermometer, and so estimated the temperature of the fire and of iron glowing red hot. This wasn’t very accurate: he (under)estimated the temperature of the fire to be about 600°C.
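As a rough illustration of Newton’s extrapolation, the geometric progression can be sketched in a few lines of Python. The 80° excess and the five-minute halving time are the figures quoted in the text; the function names and the 15-minute cooling interval in the last step are assumptions made purely for this example, not values from Newton’s account.

```python
def excess_temperature(initial_excess, halving_time, elapsed):
    """Excess over air temperature after `elapsed` minutes,
    assuming the excess halves every `halving_time` minutes."""
    return initial_excess * 0.5 ** (elapsed / halving_time)


def initial_excess_from_later_reading(later_excess, halving_time, elapsed):
    """Run the same progression backwards: given a reading taken
    `elapsed` minutes after cooling began, estimate the starting excess."""
    return later_excess * 2.0 ** (elapsed / halving_time)


# Forward: the sequence described in the text.
for minutes in (0, 5, 10, 15):
    print(minutes, excess_temperature(80.0, 5.0, minutes))

# Backward (hypothetical numbers): if the iron is first within reach of
# the thermometer, at 80 degrees above air, 15 minutes after leaving the fire.
print(initial_excess_from_later_reading(80.0, 5.0, 15))
```

The forward loop reproduces the 80, 40, 20, 10 sequence, and the backward step shows how a single low-temperature reading plus the cooling time yields an estimate for the fire. The estimate is only as good as the assumption that the same halving time holds at high temperatures.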

Fahrenheit’s Excellent Thermometer

The first really good thermometer, using mercury expanding from a bulb into a capillary tube, was made by Fahrenheit in the early 1720’s. He got the idea of using mercury from a colleague’s comment that one should correct a barometer reading to allow for the variation of the density of mercury with temperature. The point that has to be borne in mind in constructing thermometers, and defining temperature scales, is that not all liquids expand at uniform rates on heating. Water, for example, at first contracts on heating from its freezing point, then begins to expand at around forty degrees Fahrenheit, so a water thermometer wouldn’t be very helpful on a cold day. It is also not easy to manufacture a capillary tube of uniform cross section, but Fahrenheit managed to do it, and demonstrated his success by showing that his thermometers agreed with each other over a whole range of temperatures. Fortunately, it turns out that mercury is well behaved in that the temperature scale defined by taking its expansion to be uniform coincides very closely with the true temperature scale, as we shall see later.

It should be mentioned that a little earlier (1702) Amontons introduced an air pressure thermometer. He established that if air at atmospheric pressure (he states 30 inches of mercury) at the freezing point of water is enclosed, then heated to the boiling point of water while being kept at constant volume by increasing the pressure on it, the pressure needed increases by about 10 inches of mercury. This was not widely adopted as a practical temperature measuring device, but was a crucial experiment in establishing the Gas Law, and we'll discuss it in detail in a later lecture.
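As a quick check on Amontons’ figure, here is a minimal Python sketch. It assumes the modern result that the pressure of a fixed volume of gas is proportional to its temperature measured from absolute zero, and it uses the modern value of −273.15°C, which Amontons did not have. The function and constant names are invented for this illustration.

```python
ABSOLUTE_ZERO_C = -273.15  # modern value, an assumption not available to Amontons


def pressure_at_fixed_volume(p_freezing, t_celsius):
    """Pressure of a fixed volume of gas at t_celsius, given its pressure
    at 0 degrees C, assuming pressure is proportional to temperature
    measured from absolute zero."""
    return p_freezing * (t_celsius - ABSOLUTE_ZERO_C) / (0.0 - ABSOLUTE_ZERO_C)


p_boiling = pressure_at_fixed_volume(30.0, 100.0)  # inches of mercury
rise = p_boiling - 30.0
print(f"pressure at boiling point: {p_boiling:.1f} inches of mercury")
print(f"rise from freezing to boiling: {rise:.1f} inches of mercury")
```

Under these assumptions the rise comes out at about 11 inches of mercury, so Amontons’ “about 10” was a respectable measurement for 1702.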

Thermal Equilibrium and the Zeroth Law of Thermodynamics: What is Temperature?

Once the thermometer came to be widely used, more precise observations of temperature and (as we shall see) heat flow became possible. Joseph Black, a professor at the University of Edinburgh in the 1700’s, noticed that a collection of objects at different temperatures, if brought together, will all eventually reach the same temperature.

As he wrote, “By the use of these instruments [thermometers] we have learned, that if we take 1000, or more, different kinds of matter, such as metals, stones, salts, woods, cork, feathers, wool, water and a variety of other fluids, although they be all at first of different heats, let them be placed together in a room without a fire, and into which the sun does not shine, the heat will be communicated from the hotter of these bodies to the colder, during some hours, perhaps, or the course of a day, at the end of which time, if we apply a thermometer to all of them in succession, it will point to precisely the same degree.”

We say nowadays that bodies in “thermal contact” eventually come into “thermal equilibrium”—which means they finally attain the same temperature, after which no further heat flow takes place. This is equivalent to:

The Zeroth Law of Thermodynamics:

If two objects are in thermal equilibrium with a third, then they are in thermal equilibrium with each other.

The “third body” in a practical situation is just the thermometer.

It’s perhaps worth pointing out that this trivial-sounding statement certainly wasn’t obvious before the invention of the thermometer. With only the sense of touch to go on, few people would agree that a piece of wool and a bar of metal, both at 0°C, were at the same temperature.

Temperature is a Potential for Heat Flow

So this is how to understand temperature: Black observed that things in thermal contact eventually reach a common temperature, just as connected bodies of water will come to the same level if there is no exterior source or sink. In other words, in analyzing heat flow, we can understand temperature as a sort of potential. Just as gravitational potential difference will cause water to flow between connected lakes at different levels, temperature difference between thermally connected objects causes heat to flow.
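The “potential” picture can be made concrete with a small Python sketch. It rests on two simplifying assumptions that are not part of the lecture: the two bodies respond equally to a given amount of heat, and the flow in each time step is proportional to the current temperature difference. The function name, rate, and starting temperatures are all hypothetical.

```python
def equalize(temp_a, temp_b, rate=0.1, steps=200):
    """Step two thermally connected bodies toward equilibrium.

    Assumes (for illustration only) that both bodies respond equally to
    a given amount of heat and that the flow per step is proportional
    to the temperature difference between them.
    """
    for _ in range(steps):
        flow = rate * (temp_a - temp_b)  # positive when A is the hotter body
        temp_a -= flow                   # the hotter body loses heat
        temp_b += flow                   # the colder body gains it
    return temp_a, temp_b


a, b = equalize(80.0, 20.0)
print(round(a, 3), round(b, 3))
```

The flow shrinks as the difference shrinks and stops when the temperatures agree, just as water stops flowing between connected lakes once their levels match. With the equal-response assumption the common temperature is the midpoint of the two starting values; in general it need not be.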

Books I used in preparing this lecture:

A Source Book in Greek Science, M. R. Cohen and I. E. Drabkin, Harvard University Press, 1966.

A History of the Thermometer and its Uses in Meteorology, W. E. Knowles Middleton, Johns Hopkins Press, 1966.

A Source Book in Physics, W. F. Magie, McGraw-Hill, New York, 1935.